Comparing the cost of running Epic on-premises versus in the cloud is difficult because migrating to the cloud changes the basic business model for your IT infrastructure.

An on-premises deployment involves substantial capital investment in hardware such as servers, storage systems, and networking gear – all of which must be refreshed every three years or so. You also have software expenses for virtualization, various middleware packages, security and monitoring tools, and standard operating systems such as Red Hat Enterprise Linux and Microsoft Windows. These capital costs must be amortized over some reasonable period of time to get a clear picture of what it costs to operate your own data center per day or month.
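As a rough illustration of the amortization step, the sketch below spreads a one-time capital cost evenly over its refresh cycle (straight-line amortization). The dollar figure and the function name `amortized_cost` are hypothetical and chosen only to keep the arithmetic easy to follow. A real analysis would also include software licenses, support contracts, and financing costs.

```python
def amortized_cost(capital_cost: float, refresh_years: float) -> dict:
    """Spread a one-time capital cost evenly over its refresh cycle."""
    if refresh_years <= 0:
        raise ValueError("refresh_years must be positive")
    per_year = capital_cost / refresh_years
    return {
        "per_year": per_year,
        "per_month": per_year / 12,
        "per_day": per_year / 365,
    }


# Hypothetical example: $1,080,000 of hardware refreshed every three years.
costs = amortized_cost(1_080_000, 3)
print(f"per month: ${costs['per_month']:,.2f}")
print(f"per day:   ${costs['per_day']:,.2f}")
```

Straight-line amortization is the simplest choice here. An accounting team may prefer a different depreciation schedule.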

In addition, if you maintain your own data center facility, you know the wide range of costs involved, from building the facility in the first place to ongoing utilities and physical security. Using a colocation facility simplifies the cost model somewhat, in that you pay only for the space you use, measured in rack units or square footage. But choosing a colocation facility is tricky because location plays a key role in pricing. Opt for a downtown corridor and you’ll pay more for real estate. Pick a more remote location and you save on real estate, but those savings can be eaten up by higher bandwidth rates due to the longer distances involved and the lack of connectivity options. Either way, these operating expenses also go into the mix.

By comparison, the cloud pricing model is straightforward: you pay for the services you use on a per-cycle, per-byte, or per-packet basis. There’s almost no capital investment required, so the bulk of the expenditures fall under the category of operating expenses. This model allows your organization to correlate resource costs more closely with the revenues generated, since the two tend to track each other in a cloud model.

Why Run Epic on AWS?

Running Epic on AWS is helping healthcare businesses reduce the costs associated with data center management, deploy Epic releases faster, and improve business continuity and disaster recovery. This allows them to focus more resources on improving care quality for the patients they serve.

Epic on AWS: Scenarios that Deliver Cost Savings

From the preceding discussion, it’s clear that comparing on-premises costs to AWS costs directly is problematic because the business models differ. Fortunately, there’s another way to approach the question of cost savings: looking at specific scenarios. Here are three real-life examples of ways that you can save money, and realize other benefits such as faster time to market and higher productivity, by migrating specific parts of your Epic deployment to AWS.

1. Self-Service Dev/Test Environment

Epic developers live under constant pressure to speed up their work and get new products and services to market faster. To ensure that their prototypes will work in the real world, developers must have a computing environment that closely resembles the production environment. And therein lies the problem. Buying dev/test hardware and software that exactly match their production counterparts is safe but expensive, and that investment rarely runs at full utilization. If you try to make do instead with older hardware that has been cycled out of the production environment, you may compromise the development process as well as the reliability of test results.

Migrating your Epic dev/test function to AWS fixes this problem. Using self-service portals, developers can spin up a dev/test environment in minutes. For governance purposes, they usually must follow a specific process of internal approvals, but the time involved is a small fraction of the time required to procure dedicated hardware and software. Once the dev/test environment is no longer needed, the developer can quickly tear it down, and the cost clock stops running right away. In most cases, this on-demand model is far less expensive than having dedicated dev/test hardware and software on premises.
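One way to check whether the on-demand model is cheaper in a given case is a simple break-even calculation. The sketch below is a minimal illustration: the monthly cost of the dedicated environment and the hourly on-demand rate are hypothetical placeholders, not AWS prices. It also assumes that charges stop entirely at teardown, which ignores any storage that is kept between sessions.

```python
def on_demand_monthly_cost(hourly_rate: float, hours_used: float) -> float:
    """Cost of an on-demand environment that is billed only while it runs."""
    return hourly_rate * hours_used


def break_even_hours(dedicated_monthly_cost: float, hourly_rate: float) -> float:
    """Hours of use per month at which on-demand cost equals the dedicated cost."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return dedicated_monthly_cost / hourly_rate


# Hypothetical figures: a dedicated dev/test stack amortized to $1,000 per month
# versus an equivalent on-demand environment at $2.50 per hour.
threshold = break_even_hours(1_000, 2.50)
typical = on_demand_monthly_cost(2.50, 160)  # about 40 hours a week of active use

print(f"break-even usage: {threshold:.0f} hours per month")
print(f"on-demand cost at 160 hours: ${typical:,.2f}")
```

Below the break-even usage, the on-demand environment costs less. Above it, the dedicated environment does.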

This approach also ensures that developers always have access to the latest technology because AWS continually invests in emerging technology advances to give its customers a competitive edge.

2. Pilot-light DR System

There’s an old joke that goes, “What is the ROI on a parachute?” The answer is zero – until the plane starts going down, when the ROI suddenly becomes infinite. CFOs can be forgiven for seeing their DR systems in much the same light. The traditional way to implement a disaster recovery system is to replicate the production environment in a remote location that minimizes the chances that both could be impacted by the same natural or human disaster. That method is technically sound but results in resources sitting idle and depreciating without delivering value.

Many organizations are moving away from the traditional DR approach for their Epic deployments to a “pilot light” approach, one of several available approaches to Epic DR. The term refers to the small flame that is always lit in natural gas furnaces so that you can quickly ramp up the heat when needed.

In the DR context, a pilot-light environment in a remote location contains the basic components of each workload, including configuration and data, running at a low level of resource usage. This approach keeps ongoing expenses low, because you’re paying only for the small amount of pilot-light resources in use, but allows you to scale up quickly when a disaster forces a failover to the DR site.

AWS can supply additional capacity on demand, so it’s just a matter of minutes before your failover site is fully operational and offering performance similar to that of the now out-of-action primary environment.

The savings here can be substantial. In one real-world case, the pilot-light approach reduced the number of active virtual machines in the DR facility from 105 to just 12, an 89% reduction (see the Cloudticity solution brief “The Nuts and Bolts of Running Epic on AWS”).
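The 89% figure follows directly from the two virtual machine counts. The short check below reproduces the arithmetic. The helper name `percent_reduction` is just an illustrative choice.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage by which a quantity shrinks when going from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# VM counts quoted in the text: 105 in the traditional DR site, 12 in pilot-light mode.
reduction = percent_reduction(105, 12)
print(f"reduction in active VMs: {reduction:.1f}%")
```

The result rounds to the 89% quoted above. Note that this measures the number of active virtual machines, not the bill itself, since the remaining instances may differ in size and storage is still being paid for.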

Read the full white paper: Designing Your Epic Disaster Recovery on AWS.

3. Dynamic Training Resources

Training traditionally has a low overall utilization rate of resources for several reasons. On-premises training facilities are provisioned for the largest possible class size, so unused capacity is a virtual certainty during training sessions. In addition, training groups can rarely schedule their classrooms five days a week, 52 weeks a year, so idle periods are built into the process. Empty training rooms with a computer on every desk are a common sight, and not a pretty one for corporate executives concerned about return on investment.

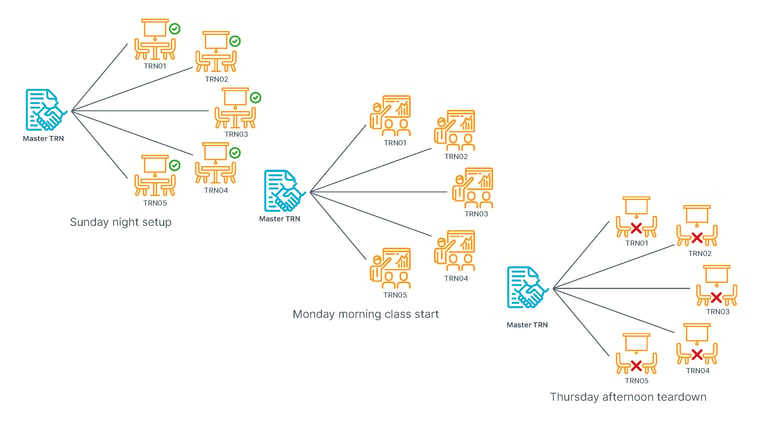

The inherent flexibility of cloud computing is a natural fit for the uncertainties of training curricula. In a cloud-based training system, on Sunday night the instructor can easily and quickly provision the exact number of training instances needed for the Monday morning session using a purpose-built script. When the class ends on Thursday afternoon, the instructor runs another script that tears down all the student instances, effectively turning off the cost tap (see figure 1).
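To see how the set-up and tear-down schedule affects usage, the sketch below counts instance-hours for a single class and compares them with a lab that is sized for the largest class and left running all week. The class sizes and dates are hypothetical. The actual provisioning scripts would call AWS APIs, which are not shown here.

```python
from datetime import datetime


def instance_hours(start: datetime, end: datetime, instance_count: int) -> float:
    """Total instance-hours consumed between provisioning and teardown."""
    if end < start:
        raise ValueError("end must not be earlier than start")
    hours = (end - start).total_seconds() / 3600
    return hours * instance_count


# Hypothetical class: 18 students, provisioned Sunday evening,
# torn down Thursday afternoon.
provisioned = datetime(2024, 1, 7, 20, 0)   # Sunday 20:00
torn_down = datetime(2024, 1, 11, 17, 0)    # Thursday 17:00
on_demand = instance_hours(provisioned, torn_down, 18)

# Hypothetical always-on lab sized for the largest class (30 seats), full week.
always_on = 30 * 24 * 7

print(f"on-demand: {on_demand:.0f} instance-hours")
print(f"always-on: {always_on} instance-hours")
```

Multiplying either figure by an hourly instance rate gives a cost estimate. The rate is left out here because it depends on the instance class chosen.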

Another benefit of using AWS for Epic training is access to the most up-to-date technology. It’s not uncommon for organizations to populate their training rooms with older computers, the thought being “it’s good enough for training.” However, this approach risks shortchanging the trainees, who will eventually have to do their jobs in the current production environment, not last year’s. In contrast, AWS keeps pace with the technology, so Epic training can use the same AWS instance classes as other parts of th

Figure 1. Training Instances Automatically Set Up and Torn Down as Needed

Summary

While it’s difficult to provide a quantitative analysis comparing the costs of running Epic on-premises versus on AWS, looking at specific scenarios shows that the savings can be real and substantial. You can learn more about running Epic on AWS at www.cloudticity.com/epic. Or watch our on-demand webinar “Beginning Your Epic to the Cloud Journey.”

Does Epic run on AWS?

Epic Systems software can be run in whole or in part on the public cloud infrastructure operated by Amazon Web Services (AWS). Many organizations begin their Epic migration to AWS by migrating one or more specific functions such as disaster recovery, dev/test, or training. This approach provides significant cost savings and allows your staff to get up to speed on AWS.

What are the benefits of running Epic DR on AWS?

Many organizations realize significant savings by transitioning from a traditional DR system to a pilot-light DR system on AWS, while maintaining the ability to meet recovery time and recovery point objectives.

What are the benefits of running Epic training on AWS?

Migrating Epic training to AWS eliminates the need to invest in hardware and software for the training lab. Training managers can spin up just the right number of computing instances for training and decommission them when the training session ends, minimizing costs.

What are the benefits of running Epic dev/test on AWS?

When Epic dev/test is migrated to AWS, developers gain the ability to self-provision the development and testing resources they need for just as long as they are needed, saving money and ensuring that developers always have access to the latest data center technology.

Discover More

Want to learn more about running Epic on AWS? Download the solution brief, “Epic on AWS Technical Overview.” Or schedule a free consultation to learn how Cloudticity and Sapphire Health Consulting can help you modernize your Epic deployment on the cloud.