The explosion of large language models (LLMs) like ChatGPT onto the scene happened a little over a year ago, and since then it has become clear that the underlying technology can be deployed for everything from marketing and education to music synthesis and protein discovery.

It seems like language models are everywhere today, but as security-focused professionals dealing with sensitive data, we face unique challenges in protecting artificial intelligence (AI) systems against security threats. It’s one thing to train an AI math tutor, and quite another to have an algorithm handling extremely sensitive data, like personally identifiable information (PII) and protected health information (PHI).

Nevertheless, there are best practices around AI and LLM security that you can employ to reduce business risk and keep hackers away from sensitive data, while utilizing these nascent technologies. Here are eleven security best practices from our IT security experts for protecting AI workloads.

Best Practices for Generative AI Security

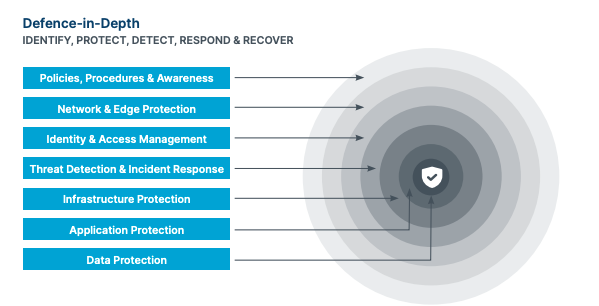

1. Apply Security to Every Layer

The first best practice applies to almost any project: use sound security protocols at every layer of it. Those layers include:

- Data Protection: We’re trying to protect the data at the lowest possible level. We want to employ security at every layer above that to detect security incidents and respond quickly and effectively. For data protection, we want to ensure that data is encrypted in transit and at rest and employ least-privilege access policies on resources that contain sensitive data.

- Application Protection: The next layer is the application layer, which addresses the applications communicating with the data. This involves ensuring that the people who are accessing that information have the right user and access permissions.

- Infrastructure Protection: In an on-premises data center, this means securing your perimeter and access to servers. If you’re using a public cloud provider, your vendor will handle much of the infrastructure security, but you still have to manage how that infrastructure gets deployed and how you grant access to those maintaining it.

- Threat Detection and Incident Response: You have to ensure you have monitoring in place to catch any threat that comes your way and respond to it promptly.

- Identity and Access Management: How do you grant the appropriate level of access to people using company resources?

- Network and Edge Protection: When and how do you allow third parties to communicate with your infrastructure and your network?

- Policies, Procedures, and Awareness: How do you understand and create the guardrails that restrict application access?

With generative AI, you’ll also want to broaden your concerns to include any data being fed to the model and any output generated by the model, as either could contain PII. This is very important, because once a model has seen PII, it becomes all but impossible to ensure that this PII doesn’t show up in a later response.
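As a rough illustration of screening both what goes into the model and what comes out of it, here is a minimal Python sketch. The three patterns and the `guarded_call` wrapper are assumptions made for this example; real PII detection needs much broader coverage than a few regular expressions, and `model` stands in for whatever callable wraps your LLM.

```python
import re

# Illustrative patterns only (assumed for this sketch); real PII takes many more forms.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def redact_pii(text: str) -> str:
    """Replace anything matching a known PII pattern with a placeholder tag."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def guarded_call(model, prompt: str) -> str:
    """Scrub the prompt before the model sees it, and the reply before the user does."""
    return redact_pii(model(redact_pii(prompt)))
```

Wrapping every model call this way means a missed filter on one side still has a second chance to catch the data on the other.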

2. Use a Policy of Least Privilege

In information security, the “principle of least privilege” refers to the idea that a person, application, or entity should only be given access to the specific data they need to do whatever work they need to get done. Instituting this as a policy – i.e. as a “policy of least privilege” – does a lot to improve security posture, as it means that your attack surface is dramatically reduced and it’s much harder for ransomware or malware to spread throughout your organization.
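In code, least privilege usually boils down to a deny-by-default check. The sketch below shows the idea in Python; the role names and permission strings are hypothetical and exist only for this example.

```python
# Hypothetical roles and permission names, for illustration only.
ROLE_PERMISSIONS = {
    "billing_clerk": {"invoices:read"},
    "clinician": {"charts:read", "charts:write"},
    "ml_pipeline": {"training_data:read"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: access is granted only if explicitly listed for the role."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

An unknown role gets an empty permission set, so anything not explicitly granted is refused.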

3. Use Multiple Accounts

If using a public cloud service provider, which is what we recommend, use multiple accounts for authentication purposes. No one should have access to everything, as this introduces a single point of failure that could compromise your entire technology stack if breached.

4. Isolate Network Resources

You should also isolate your network resources, for the same reason that you should use multiple accounts. This means preventing access to your model from the general internet, or at least carefully controlling it.

If you’ve spent any time on the internet, you know that some of those using it aren’t exactly upstanding model citizens. When they get access to any new technology, including your generative AI offering, they’ll attempt to jailbreak or otherwise misuse it. This is a misstep you can’t afford when working with sensitive data.
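One simple form of this control is an allowlist of networks that may reach the model endpoint. Here is a minimal Python sketch using the standard `ipaddress` module; the network ranges are assumptions for the example, and in practice this kind of rule normally lives in your firewall or cloud security groups rather than in application code.

```python
import ipaddress

# Hypothetical private ranges allowed to reach the model endpoint.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.10.0/24"),
]


def may_reach_model(client_ip: str) -> bool:
    """Return True only if the caller's address falls inside an allowed network."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # Malformed addresses are rejected outright.
    return any(address in network for network in ALLOWED_NETWORKS)
```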

5. Encrypt Everything

Another fairly basic modification you can make to improve your security posture dramatically is to encrypt everything – the data the model is trained on, any messages being sent to it, any text it’s generating, etc.

Encrypt at rest and encrypt in transit. One subtlety worth mentioning is that the encryption standards used across the stack – between components and plugins, between users and the model, etc. – must be consistent. Without consistency, you could end up in a situation where a dev environment has PHI in it. This may sound fine, but the dev team is likely to spin up instances that they may or may not remember to shut down or fully protect. This creates an easy target for an attack. To combat this, encrypt sensitive data all the time, or use synthetic data in development.
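One way to keep in-transit encryption consistent is to define the TLS settings once and have every component reuse them. The Python sketch below does this with the standard `ssl` module; the function name and the choice of TLS 1.2 as the floor are assumptions for the example, not a recommendation for your specific compliance requirements.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Build one TLS configuration that every component imports and reuses."""
    context = ssl.create_default_context()  # Verifies certificates and hostnames.
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # Refuse older protocol versions.
    return context
```

Because the settings live in a single function, tightening the standard later is a one-line change instead of a hunt through every service.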

6. Enable Traceability

Traceability refers to your ability to tell who is interacting with your application, at what point they entered (i.e. whether they hit the API or accessed your infrastructure directly at some point), and what they did while they were there. This will facilitate monitoring any changes to the model or its scaffolding, and make it easier to identify any potential attacks or problems.
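A traceable system records who did what, and through which entry point, in a form that machines can search. Here is a minimal Python sketch of such a record; the field names are assumptions chosen for this example.

```python
import json
from datetime import datetime, timezone


def audit_record(actor: str, entry_point: str, action: str, resource: str) -> str:
    """Serialize one who/where/what event as a single JSON line."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,              # Who interacted with the application.
        "entry_point": entry_point,  # Where they came in, e.g. "api" or "ssh".
        "action": action,            # What they did.
        "resource": resource,        # What they did it to.
    }
    return json.dumps(event, sort_keys=True)
```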

7. Centralize Findings

If you’ve enabled traceability, used a policy of least privilege, or taken any of the previous steps, you should now have a monitoring system that generates information related to what’s happening inside your application. Whenever possible, bring all this data together in one place. This will allow you to quickly assess activity occurring along any attack vector; you’ll be able to understand whether an attack consisted of one event or multiple related events, whether it originated with one account or several, etc.

All of this is crucial for mitigating damage and closing off those weaknesses in the future, but by default, the relevant data tends to be scattered across different logging tools. One advantage of implementing a security lake is that you can centralize these findings, but, as is usually the case, there are other ways to skin this particular cat.
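To show why centralizing helps, here is a small Python sketch that merges events from several log sources and groups them by account, so related activity can be read as one timeline. The `account` and `time` keys are assumptions about the event format made for this example.

```python
from collections import defaultdict


def group_by_account(*sources):
    """Merge events from several log sources and group them by account, oldest first."""
    grouped = defaultdict(list)
    for source in sources:
        for event in source:
            grouped[event["account"]].append(event)
    for events in grouped.values():
        events.sort(key=lambda event: event["time"])
    return dict(grouped)
```

With this view, a failed VPN login followed by an unusual API call from the same account shows up as one sequence rather than two unrelated entries in two tools.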

8. Keep People Away From Data

You may have heard the phrase “information wants to be free”, which refers to a general philosophy of openness toward data. Where security is concerned, however, this won’t do because some people want to use data for nefarious ends – and this is especially true of valuable PII.

Once you’ve centralized your findings following the previous best practice, you must be very careful about who will see those findings. Not only does excessive openness mean a greater attack surface, it also makes it harder to find and patch vulnerabilities if you do experience some kind of breach.

You should only grant data access to people who really need it, and even then, they should only get access to what they need, when they need it, to do their jobs correctly.
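Access “when they need it” can be modeled as a grant that expires on its own. The Python sketch below is one minimal way to express that; the `AccessGrant` type and its fields are hypothetical names introduced for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessGrant:
    user: str
    resource: str
    expires_at: float  # Seconds since the epoch, e.g. time.time() + 3600.


def has_access(grants, user: str, resource: str, now: float) -> bool:
    """Allow access only through a matching grant that has not yet expired."""
    return any(
        grant.user == user and grant.resource == resource and now < grant.expires_at
        for grant in grants
    )
```

Since every grant carries an expiry, forgotten permissions lapse by themselves instead of accumulating.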

9. Automate Security Best Practices

Even with the best monitoring and alerts, you’ll still need security automation in place. This means, for example, setting up systems that automatically implement network protections and policies when someone is deploying something new in your environment.

You also need to automate incident response. You could have alerts going off, for example, telling you that someone is inside your network trying to get access to your data. But if you don’t have a way to respond to and contain that type of attack automatically, you’re at the mercy of employees, hoping someone will see the alert and respond correctly before the attacker has time to exfiltrate any data.
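As a sketch of what automatic containment can look like, the Python function below blocks an account and revokes its sessions as soon as a high-severity alert arrives. The alert shape, the severity labels, and the in-memory session store are all assumptions for this example; a real system would call your identity provider and network controls instead.

```python
def respond_to_alert(alert: dict, active_sessions: dict, blocked: set) -> list:
    """Contain a high-severity alert immediately and return the actions taken."""
    actions = []
    if alert["severity"] != "high":
        return actions  # Lower severities are left for human review.
    account = alert["account"]
    if account not in blocked:
        blocked.add(account)
        actions.append(f"blocked {account}")
    # Collect the session IDs first so the dict is not modified while iterating.
    revoked = [sid for sid, owner in active_sessions.items() if owner == account]
    for sid in revoked:
        del active_sessions[sid]
        actions.append(f"revoked {sid}")
    return actions
```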

10. Prepare for Security Events

You must also create a regular cadence for testing your systems and teams under different security scenarios. You should be checking to make sure that your automation holds up, that the relevant people are responding correctly, and, in general, that the things you expect to happen are actually happening.

All the best practices in the world won’t help you if you’re caught totally unprepared when a threat emerges, so you need to be consistently testing to make sure you’re ready if it does.

11. Use Multifactor Authentication (MFA)

MFA is one of the easiest ways to ensure that sensitive data are being accessed by appropriate individuals. It’s usually not hard to create and enforce a policy of using MFA across your infrastructure, and you should be doing this to protect yourself as much as possible when using generative AI.

Your MFA policies should extend not only to people within your team, but also to customers, patients, and partners. This is one of the best ways of preventing malicious actors from infiltrating your systems by pretending to be the people you’re serving.
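Many authenticator apps implement the second factor as a time-based one-time password (TOTP, defined in RFC 6238). The Python sketch below shows how such a code is derived and checked. It is for illustration only: the function names are our own, and a production system should rely on a vetted MFA provider or library, accept a small window of adjacent time steps, and rate-limit attempts.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    counter = struct.pack(">Q", unix_time // step)  # Number of elapsed time steps.
    digest = hmac.new(secret, counter, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # Dynamic truncation, per RFC 4226.
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, unix_time: int) -> bool:
    """Compare the submitted code to the current one in constant time."""
    return hmac.compare_digest(totp(secret, unix_time), submitted)
```

The server and the user’s device share the secret once at enrollment; after that, both can compute the same short-lived code without sending the secret again.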

Secure AI Solutions in Healthcare Today

In today's fast-paced market, there's pressure to integrate generative AI solutions into products and services. In healthcare, we can use this technology to improve the patient experience, reduce the administrative burden on clinicians, and stay competitive in the market. And while it's tempting to jump in with gusto, you'll want to make sure you're considering security first. After all, you only have to look as far as the daily news to understand the magnitude of the threat that healthcare data breaches pose to organizations.

Understanding the top AI security threats, and following the above best practices, is a great place to start.

But security isn't the only roadblock to AI and LLM adoption in healthcare. You also have to make sure your data is ready for AI, and you need an adoption strategy and roadmap. To learn more, download the FREE Guide, Getting Started with Generative AI in Healthcare.

Or schedule a FREE consultation to learn how we can help.